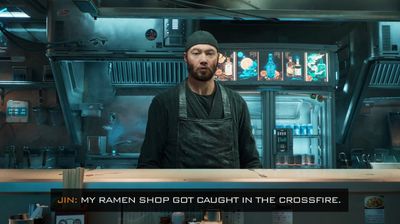

At Computex 2023 in Taipei, Nvidia CEO Jensen Huang just gave the world a glimpse of what it could be like when gaming and AI collide – with a graphically stunning rendering of a cyberpunk ramen shop where you can actually talk to the owner.

Seriously: instead of clicking through dialogue options, the demo imagines a game where you could hold down a button, simply say something in your own voice, and get an answer from a video game character. Nvidia calls it a “peek into the future of games”.

Unfortunately, the actual dialogue leaves a lot to be desired – maybe try GPT-4 or Sudowrite next time, Nvidia?

Here’s the whole conversation I hastily transcribed:

Player: Hey Jin, how are you?

Jin: Unfortunately not so well.

Player: How come?

Jin: I’m worried about the crime around here. It’s gotten bad lately. My ramen shop got caught in the crossfire.

Player: Can I help?

Jin: If you want to do something about this, I’ve heard rumors that the powerful crime lord Kumon Aoki is wreaking havoc in the city. He may be the cause of this violence.

Player: I’ll talk to him. Where can I find him?

Jin: I hear he hangs out in the underground fight clubs on the east side of town. Try there.

Player: OK, I’m going.

Jin: Be careful, Kai.

From a single video of a single conversation, it’s hard to see how this beats picking from an NPC dialogue tree, but the impressive part is that the generative AI is responding to natural speech. Hopefully Nvidia will release the demo so we can try it ourselves and get some radically different results.

Screenshot by Sean Hollister / The Verge

Nvidia and partner Convai built the demo to promote the tools behind it, specifically a suite of middleware called Nvidia ACE (Avatar Cloud Engine) for Games, which can run locally or in the cloud. The full ACE suite includes the company’s NeMo tools for deploying large language models (LLMs) and Riva speech-to-text and text-to-speech, among others.

The demo uses more than just that, of course: it’s built in Unreal Engine 5 with loads of ray-tracing… At this point, we’ve simply seen far more engaging dialogue from chatbots, trite and distracting as they can sometimes be.

Screenshot by Sean Hollister / The Verge

In a Computex pre-briefing, Nvidia VP of GeForce Platform Jason Paul told me that yes, the technology can scale to more than one character at a time and could, in theory, even let NPCs talk to each other, though he admitted he hadn’t seen that tested.

It’s not clear whether any developer will adopt the entire ACE toolkit the way the demo does, but STALKER 2: Heart of Chernobyl and Fortress Solis will use the piece Nvidia calls “Omniverse Audio2Face,” which tries to match a 3D character’s facial animation to their voice actor’s speech.

Correction, 11:25 PM ET: It was Jason Paul of Nvidia, not Rev Lebaredian, who answered my question. I regret the error.

Janice has been with businesskinda for 5 years, writing copy for client websites, blog posts, EDMs and other mediums to engage readers and encourage action. By collaborating with clients, our SEO manager and the wider businesskinda team, Janice seeks to understand an audience before creating memorable, persuasive copy.